How Many Computers to Identify a Cat? 16,000

nytimes

By JOHN MARKOFF

Inside Google’s secretive X laboratory, known for inventing self-driving cars and augmented reality glasses, a small group of researchers began working several years ago on a simulation of the human brain.

There Google scientists created one of the largest neural networks for machine learning by connecting 16,000 computer processors, which they turned loose on the Internet to learn on its own.

Presented with 10 million digital images found in YouTube videos, what did Google’s brain do? What millions of humans do with YouTube: looked for cats.

The neural network taught itself to recognize cats, which is actually no frivolous activity. This week the researchers will present the results of their work at a conference in Edinburgh, Scotland. The Google scientists and programmers will note that while it is hardly news that the Internet is full of cat videos, the simulation nevertheless surprised them. It performed far better than any previous effort by roughly doubling its accuracy in recognizing objects in a challenging list of 20,000 distinct items.

The research is representative of a new generation of computer science that is exploiting the falling cost of computing and the availability of huge clusters of computers in giant data centers. It is leading to significant advances in areas as diverse as machine vision and perception, speech recognition and language translation.

Although some of the computer science ideas that the researchers are using are not new, the sheer scale of the software simulations is leading to learning systems that were not previously possible. And Google researchers are not alone in exploiting the techniques, which are referred to as “deep learning” models. Last year Microsoft scientists presented research showing that the techniques could be applied equally well to build computer systems to understand human speech.

“This is the hottest thing in the speech recognition field these days,” said Yann LeCun, a computer scientist who specializes in machine learning at the Courant Institute of Mathematical Sciences at New York University.

And then, of course, there are the cats.

To find them, the Google research team, led by the Stanford University computer scientist Andrew Y. Ng and the Google fellow Jeff Dean, used an array of 16,000 processors to create a neural network with more than one billion connections. They then fed it random thumbnails of images, one each extracted from 10 million YouTube videos.

The videos were selected randomly and that in itself is an interesting comment on what interests humans in the Internet age. However, the research is also striking. That is because the software-based neural network created by the researchers appeared to closely mirror theories developed by biologists that suggest individual neurons are trained inside the brain to detect significant objects.

Currently much commercial machine vision technology is done by having humans “supervise” the learning process by labeling specific features. In the Google research, the machine was given no help in identifying features.

“The idea is that instead of having teams of researchers trying to find out how to find edges, you instead throw a ton of data at the algorithm and you let the data speak and have the software automatically learn from the data,” Dr. Ng said.

“We never told it during the training, ‘This is a cat,’ ” said Dr. Dean, who originally helped Google design the software that lets it easily break programs into many tasks that can be computed simultaneously. “It basically invented the concept of a cat. We probably have other ones that are side views of cats.”

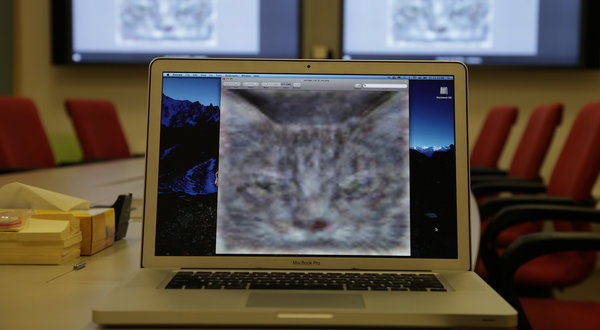

The Google brain assembled a dreamlike digital image of a cat by employing a hierarchy of memory locations to successively cull out general features after being exposed to millions of images. The scientists said, however, that it appeared they had developed a cybernetic cousin to what takes place in the brain’s visual cortex.

Neuroscientists have discussed the possibility of what they call the “grandmother neuron,” specialized cells in the brain that fire when they are exposed repeatedly or “trained” to recognize a particular face of an individual.

“You learn to identify a friend through repetition,” said Gary Bradski, a neuroscientist at Industrial Perception, in Palo Alto, Calif.

While the scientists were struck by the parallel emergence of the cat images, as well as human faces and body parts in specific memory regions of their computer model, Dr. Ng said he was cautious about drawing parallels between his software system and biological life.

“A loose and frankly awful analogy is that our numerical parameters correspond to synapses,” said Dr. Ng. He noted that one difference was that despite the immense computing capacity that the scientists used, it was still dwarfed by the number of connections found in the brain.

“It is worth noting that our network is still tiny compared to the human visual cortex, which is a million times larger in terms of the number of neurons and synapses,” the researchers wrote.

Despite being dwarfed by the immense scale of biological brains, the Google research provides new evidence that existing machine learning algorithms improve greatly as the machines are given access to large pools of data.

“The Stanford/Google paper pushes the envelope on the size and scale of neural networks by an order of magnitude over previous efforts,” said David A. Bader, executive director of high-performance computing at the Georgia Tech College of Computing. He said that rapid increases in computer technology would close the gap within a relatively short period of time: “The scale of modeling the full human visual cortex may be within reach before the end of the decade.”

Google scientists said that the research project had now moved out of the Google X laboratory and was being pursued in the division that houses the company’s search business and related services. Potential applications include improvements to image search, speech recognition and machine language translation.

Despite their success, the Google researchers remained cautious about whether they had hit upon the holy grail of machines that can teach themselves.

“It’d be fantastic if it turns out that all we need to do is take current algorithms and run them bigger, but my gut feeling is that we still don’t quite have the right algorithm yet,” said Dr. Ng.

-------------------------------------------

Thanks to mediabistro.com

2012-06-27 02:38:22